====== nginx with PHP-FPM ======

refer:

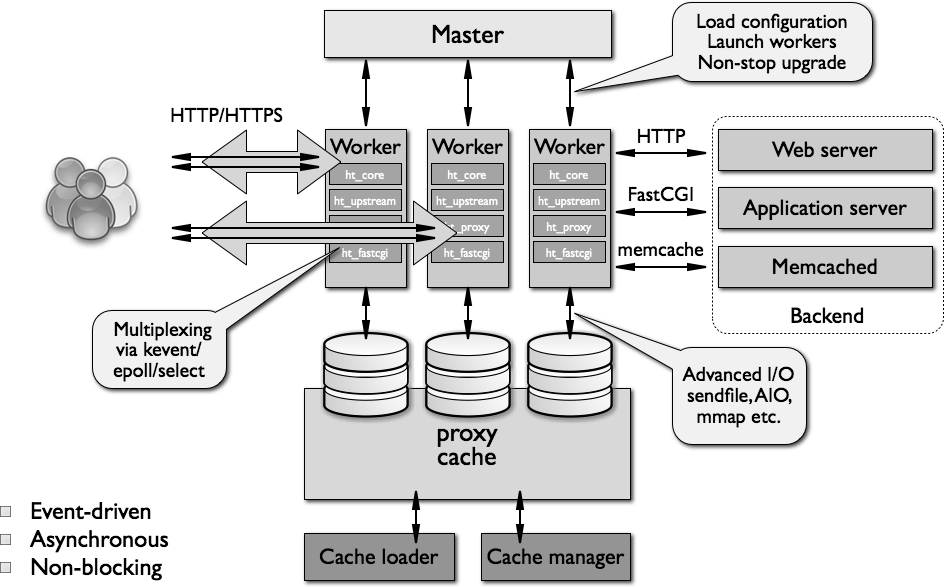

===== Overview of nginx Architecture =====

Traditional process- or thread-based models of handling concurrent connections involve handling each connection with a separate process or thread, and blocking on network or input/output operations. Depending on the application, it can be very inefficient in terms of memory and CPU consumption:

- Spawning a separate process or thread requires preparation of a new runtime environment, including allocation of heap and stack memory, and the creation of a new execution context.

- Additional CPU time is also spent creating these items, which can eventually lead to poor performance due to thread thrashing on excessive context switching.

- All of these complications manifest themselves in older web server architectures like Apache's. This is a tradeoff between offering a rich set of generally applicable features and optimized usage of server resources.

From the very beginning, nginx was meant to be a specialized tool to achieve more performance, density and economical use of server resources while enabling dynamic growth of a website, so it has followed a different model. It was actually inspired by the ongoing development of advanced event-based mechanisms in a variety of operating systems. What resulted is a modular, event-driven, asynchronous, single-threaded, non-blocking architecture which became the foundation of nginx code.

nginx uses multiplexing and event notifications heavily, and dedicates specific tasks to separate processes. Connections are processed in a highly efficient run-loop in a limited number of single-threaded processes called workers. Within each worker nginx can handle many thousands of concurrent connections and requests per second.

===== Nginx Vs Apache =====

- Nginx is based on an event-driven architecture; Apache is based on a process-driven architecture. Interestingly, the earliest Apache releases had no multitasking architecture; the MPMs (multi-processing modules) were added later to provide it.

- Nginx does not create a new process for each request. Apache, with its classic prefork MPM, dedicates a process to every connection it is serving.

- Nginx uses very little memory when serving static pages, whereas Apache's process-per-connection model increases memory consumption.

- Several benchmarks indicate that Nginx is considerably faster than Apache at serving static pages.

- Nginx development started in 2002; Apache's initial release was in 1995.

- For complex setups, Apache can be easier to configure, because it ships with a large set of configuration features that cover a wide range of requirements.

- Apache has excellent documentation compared to Nginx.

- In general, Nginx has fewer components for adding features, while Apache offers far more features and functionality.

- Nginx does not support operating systems such as OpenVMS and IBM i; Apache supports a much wider range of operating systems.

- Since Nginx comes only with the core features that are required for a web server, it is lightweight when compared to Apache.

- Nginx's performance and scalability depend less directly on hardware resources, whereas Apache's performance and scalability depend heavily on the underlying memory and CPU.

===== Basic Nginx Configuration =====

Some basic directives:

- Location (refer: https://docs.nginx.com/nginx/admin-guide/basic-functionality/managing-configuration-files/)

<code>
user nobody; # a directive in the 'main' context

events {
    # configuration of connection processing
}

http {
    # Configuration specific to HTTP and affecting all virtual servers

    server {
        # configuration of HTTP virtual server 1

        location /one {
            # configuration for processing URIs starting with '/one'
        }

        location /two {
            # configuration for processing URIs starting with '/two'
        }
    }

    server {
        # configuration of HTTP virtual server 2
    }
}

stream {
    # Configuration specific to TCP/UDP and affecting all virtual servers

    server {
        # configuration of TCP virtual server 1
    }
}
</code>

- request_filename (refer: https://docs.nginx.com/nginx/admin-guide/web-server/web-server/)

<code>
if (!-e $request_filename) {
    rewrite ^ /path/index.php last;
}
</code>
⇒ This checks whether a file, directory, or symbolic link matches $request_filename. If nothing is found, the request is rewritten internally to /path/index.php.

- try_files (refer https://docs.nginx.com/nginx/admin-guide/web-server/serving-static-content/)

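<code>
# First variant: fall back to a 404 response
location / {
    try_files $uri $uri/ $uri.html =404;
}

# Second variant: fall back to a fixed page
location / {
    try_files $uri $uri/ /test/index.html;
}
</code>
⇒ How to read these directives: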

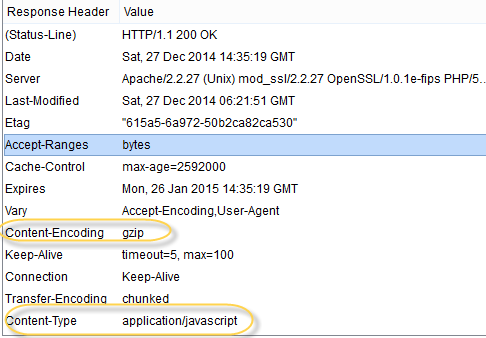

- location / ⇒ matches all locations.

- try_files $uri ⇒ try $uri first. For example, for http://example.com/images/image.jpg nginx checks whether a file called image.jpg exists inside /images and, if it is found, serves it.

- $uri/ ⇒ if the first condition ($uri) did not match, try the URI as a directory.

===== Optimize nginx configuration for performance and benchmark =====

refer:

* http://www.freshblurbs.com/blog/2015/11/28/high-load-nginx-config.html

* http://tweaked.io/guide/nginx/

* http://wiki.nginx.org/FullExample

* http://wiki.nginx.org/Configuration

==== General Tuning ====

nginx uses a fixed number of workers, each of which handles incoming requests. The general rule of thumb is that you should have one worker for each CPU core your server contains. You can count the CPUs available to your system by running:<code bash>
grep ^processor /proc/cpuinfo | wc -l
</code>

With a quad-core processor this would give you a configuration that starts like so:<code>
# One worker per CPU-core.
worker_processes 4;

events {
    worker_connections 8192;
    multi_accept on;
    use epoll;
}

worker_rlimit_nofile 40000;

http {
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 15;

    # Your content here ..
}
</code>

Here we've also raised the worker_connections setting, which specifies how many connections each worker process can handle. The maximum number of connections your server can process is the result of:<code>
worker_processes * worker_connections (= 4 * 8192 = 32768 in our example).
</code>

==== compress static contents ====

One of the first things that many people try to do is to enable the gzip compression module available with nginx. The intention here is that the objects which the server sends to requesting clients will be smaller, and thus faster to send, which helps on low-bandwidth networks.

However, this involves the trade-off common to tuning: performing the compression takes CPU resources from your server, which frequently means that you'd be better off not enabling it at all. Generally the best approach with compression is to only enable it for large files, and to avoid compressing things that are unlikely to be reduced in size (such as images, executables, and similar binary files).

The gzip directives below belong to the standard gzip module, which is built by default. Serving pre-compressed .gz files with gzip_static additionally requires nginx to be built with --with-http_gzip_static_module.

With the above in mind, the following is a sensible configuration. Edit /usr/local/nginx/conf/nginx.conf:<code>
gzip on;
gzip_vary on;
gzip_min_length 10240;
gzip_comp_level 2;
gzip_proxied expired no-cache no-store private auth;
gzip_types text/plain text/css text/xml text/javascript application/x-javascript application/xml;
gzip_disable "MSIE [1-6]\.";
</code>

This enables compression for files that are over 10k, aren't being requested by early versions of Microsoft's Internet Explorer, and only attempts to compress text-based files.

To check that gzip is enabled, inspect the response headers in a web browser (we use HttpFox for this):
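The same check can also be done from the command line. The following is a minimal sketch, not part of the original notes: the helper name content_encoding and the example URL are assumptions made for illustration. It reads response headers on stdin and reports whether they announce gzip.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "gzip" if the response headers read from
# stdin contain a Content-Encoding header mentioning gzip, else "none".
content_encoding() {
    if grep -iq '^content-encoding:.*gzip'; then
        echo "gzip"
    else
        echo "none"
    fi
}

# Usage against a live server (the URL is a placeholder):
#   curl -s -o /dev/null -D - -H 'Accept-Encoding: gzip' \
#        http://example.com/css/site.css | content_encoding
```

Keep in mind that with gzip_min_length 10240 a small file is expected to report "none" even when compression is configured correctly.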

==== Nginx Request / Upload Max Body Size (client_max_body_size) ====

If you want to allow users to upload files over HTTP, you may need to increase the maximum request body size. This is done with client_max_body_size inside http {…}. The default is 1 MB; the following example raises it to 20 MB and also increases the body buffer size:<code>
client_max_body_size 20m;
client_body_buffer_size 128k;
</code>

If you get the following error, client_max_body_size is too low:<code>
"Request Entity Too Large" (413)
</code>

==== Nginx Cache Control for Static Files (Browser Cache Control Directives) ====

Turn off access logging for static files and let browsers cache them for 360 days:

<code>
location ~* \.(jpg|jpeg|gif|png|css|js|ico|xml)$ {
    access_log off;
    log_not_found off;
    expires 360d;
}
</code>
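To confirm the caching headers, one option is to look at the Cache-Control header that nginx adds for the expires directive. The sketch below is illustrative only: the function names days_to_max_age and header_max_age and the example URL are assumptions. It converts a lifetime in days to seconds and extracts max-age from response headers so the two can be compared.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a lifetime in days into the number of
# seconds expected in "Cache-Control: max-age=...".
days_to_max_age() {
    echo $(( $1 * 86400 ))
}

# Hypothetical helper: read response headers on stdin and print the
# max-age value of the Cache-Control header, if there is one.
header_max_age() {
    tr -d '\r' | sed -n 's/^[Cc]ache-[Cc]ontrol:.*max-age=\([0-9][0-9]*\).*/\1/p'
}

# Usage against a live server (the URL is a placeholder):
#   curl -s -o /dev/null -D - http://example.com/img/logo.png | header_max_age
#   days_to_max_age 360
```

If both commands print the same number, the location block is being applied to that file.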

==== Run benchmark for checking optimize effect ====

Find slow CSS, JS or image files and benchmark them:

<code bash>
ab -n 20 -c 4 http://shop.babies.vn/media/js/af1ae2e07ff564e3d1499f7eb7aecdf9.js
</code>

* benchmark on windows:<code>
ab -n 20 -c 4 http://shop.babies.vn/media/js/af1ae2e07ff564e3d1499f7eb7aecdf9.js

Percentage of the requests served within a certain time (ms)
  50%  16166
  66%  17571
  75%  17925
  80%  18328
  90%  22019
  95%  26190
  98%  26190
  99%  26190
 100%  26190 (longest request)
</code>

* benchmark on local linux (web hosting):<code>
ab -n 100 -c 20 http://shop.babies.vn/media/js/af1ae2e07ff564e3d1499f7eb7aecdf9.js

Percentage of the requests served within a certain time (ms)
  50%  8
  66%  8
  75%  8
  80%  8
  90%  8
  95%  13
  98%  13
  99%  14
 100%  14 (longest request)
</code>

* benchmark load speed from other countries with http://www.webpagetest.org/

⇒ The benchmark above for the static file (ab -n 20 -c 4 http://shop.babies.vn/media/js/af1ae2e07ff564e3d1499f7eb7aecdf9.js, size 400k) shows that the performance problem comes from the network or bandwidth: the median response time is about 16 s from the Windows machine but only 8 ms on the hosting server itself.
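When comparing several runs it can help to pull a single percentile out of the ab report. The following is a small sketch under that assumption; the function name ab_percentile is made up for this example and is not part of ab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read ab output on stdin and print the time (ms)
# reported for the percentile given as $1, e.g. 95.
ab_percentile() {
    awk -v p="$1%" '$1 == p { print $2 }'
}

# Usage:
#   ab -n 20 -c 4 http://shop.babies.vn/media/js/af1ae2e07ff564e3d1499f7eb7aecdf9.js | ab_percentile 95
```

Running it once from a remote client and once on the server gives two numbers that can be compared directly.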

===== Nginx Rewrite Rules and Regular Expression =====

refer: https://blog.nginx.org/blog/creating-nginx-rewrite-rules

<code>
# -----------------------------------------------------------------------
# ~   : Enable regex mode for location (case-sensitive match)
# ~*  : Case-insensitive regex match
# |   : Or
# ()  : Match group or evaluate the content of ()
# $   : The expression must be at the end of the evaluated text
#       (no char/text after the match). $ is usually used at the end of a
#       regex location expression.
# ?   : Zero or one occurrence of the previous char, e.g. jpe?g
# ^~  : Prefix match (not a regex). If this location is the longest
#       matching prefix, nginx does not check regexp locations at all,
#       even if one of them would also match.
#       Example: location ^~ /realestate/
# =   : Exact match, no sub folders (location = /)
# ^   : Match the beginning of the text (opposite of $). By itself, ^ is a
#       shortcut for all paths (since they all have a beginning).
# .*  : Match zero, one or more occurrences of any char
# \   : Escape the next char
# .   : Any char
# *   : Match zero, one or more occurrences of the previous char
# !   : Not (negation, as in !~ or !-e; inside a regex, (?!...) is a
#       negative lookahead)
# {}  : Match a specific number of occurrences, e.g. [0-9]{3} matches 342
#       but not 32; {2,4} matches lengths of 2, 3 and 4
# +   : Match one or more occurrences of the previous char
# []  : Match any char inside
# -----------------------------------------------------------------------
</code>
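A pattern can be tried out in the shell before it goes into a location block. This is only an approximation: grep -E uses POSIX extended regular expressions while nginx uses PCRE, so the sketch is limited to simple patterns where the two agree. The helper name matches_ci and the sample URIs are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if URI $1 matches pattern $2 ignoring
# case, roughly what "location ~* PATTERN" would do.
matches_ci() {
    printf '%s\n' "$1" | grep -Eiq -- "$2"
}

# The static-file pattern used in the cache control section.
static_re='\.(jpg|jpeg|gif|png|css|js|ico|xml)$'

for uri in /media/js/app.js /IMG/LOGO.PNG /index.php; do
    if matches_ci "$uri" "$static_re"; then
        echo "$uri: static"
    else
        echo "$uri: not static"
    fi
done
```

The loop reports the first two URIs as static and /index.php as not static, because $ anchors the extension to the end of the URI.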

===== PHP-FPM Config and Optimize =====

refer:

* https://www.if-not-true-then-false.com/2011/nginx-and-php-fpm-configuration-and-optimizing-tips-and-tricks/

* https://tweaked.io/guide/nginx/

global config for all pools:<code>
[global]
; Error log file
; If it's set to "syslog", log is sent to syslogd instead of being written
; in a local file.
; Note: the default prefix is /usr/local/php/var
; Default Value: log/php-fpm.log
;error_log = log/php-fpm.log

; Log level
; Possible Values: alert, error, warning, notice, debug
; Default Value: notice
;log_level = notice

; The maximum number of processes FPM will fork. This has been designed to control
; the global number of processes when using dynamic PM within a lot of pools.
; Use it with caution.
; Note: A value of 0 indicates no limit
; Default Value: 0
; process.max = 128
</code>

pool www config:<code>
; Choose how the process manager will control the number of child processes.
; Possible Values:
;   static   - a fixed number (pm.max_children) of child processes;
;   dynamic  - the number of child processes are set dynamically based on the
;              following directives. With this process management, there will be
;              always at least 1 children.
;              pm.max_children      - the maximum number of children that can
;                                     be alive at the same time.
;              pm.start_servers     - the number of children created on startup.
;              pm.min_spare_servers - the minimum number of children in 'idle'
;                                     state (waiting to process). If the number
;                                     of 'idle' processes is less than this
;                                     number then some children will be created.
;              pm.max_spare_servers - the maximum number of children in 'idle'
;                                     state (waiting to process). If the number
;                                     of 'idle' processes is greater than this
;                                     number then some children will be killed.
;   ondemand - no children are created at startup. Children will be forked when
;              new requests will connect. The following parameter are used:
;              pm.max_children         - the maximum number of children that
;                                        can be alive at the same time.
;              pm.process_idle_timeout - The number of seconds after which
;                                        an idle process will be killed.
</code>

==== PHP-FPM Global Configuration Tweaks ====

Set up emergency_restart_threshold, emergency_restart_interval and process_control_timeout. By default these options are all off (0), but it can be better to set them in php-fpm.conf, for example as follows (they can be left off for performance):<code>
emergency_restart_threshold = 10
emergency_restart_interval = 1m
process_control_timeout = 10s
</code>

What does this mean? If 10 PHP-FPM child processes exit with SIGSEGV or SIGBUS within 1 minute, PHP-FPM restarts automatically. This configuration also sets a 10-second time limit for child processes to wait for a reaction to signals from the master.

(In some cases the php-fpm child processes run out of memory and can no longer process requests; with these settings php-fpm restarts them automatically.)

==== PHP-FPM Pools Configuration ====

=== Basic Config ===

By default, php-fpm uses the pool [www] for all sites. For more advanced setups, it is possible to use different pools for different sites, allocate resources precisely, and even use different users and groups for every pool. The following is an example layout of configuration files for PHP-FPM pools for three different sites (or three different parts of the same site):<code>
/etc/php-fpm.d/site.conf
/etc/php-fpm.d/blog.conf
/etc/php-fpm.d/forums.conf
</code>

Or include the pool files from php-fpm.conf:<code>
; Relative path can also be used. They will be prefixed by:
;  - the global prefix if it's been set (-p argument)
;  - /onec/php otherwise
;include=etc/fpm.d/*.conf
</code>(Create the directory /onec/php/etc/fpm.d/ and uncomment the include line.)

Example configurations for each pool:

* default pool [www] (listens on port 9000)<code>
[www]
; Per pool prefix
; It only applies on the following directives:
; - 'access.log'
; - 'slowlog'
; - 'listen' (unixsocket)
; - 'chroot'
; - 'chdir'
; - 'php_values'
; - 'php_admin_values'
; When not set, the global prefix (or /onec/php) applies instead.
; Note: This directive can also be relative to the global prefix.
; Default Value: none
;prefix = /path/to/pools/$pool

; Unix user/group of processes
; Note: The user is mandatory. If the group is not set, the default user's group
;       will be used.
user = nobody
group = nobody

; The address on which to accept FastCGI requests.
; Valid syntaxes are:
;   'ip.add.re.ss:port'    - to listen on a TCP socket to a specific IPv4 address on
;                            a specific port;
;   '[ip:6:addr:ess]:port' - to listen on a TCP socket to a specific IPv6 address on
;                            a specific port;
;   'port'                 - to listen on a TCP socket to all IPv4 addresses on a
;                            specific port;
;   '[::]:port'            - to listen on a TCP socket to all addresses
;                            (IPv6 and IPv4-mapped) on a specific port;
;   '/path/to/unix/socket' - to listen on a unix socket.
; Note: This value is mandatory.
listen = 127.0.0.1:9000
</code>

* /etc/php-fpm.d/site.conf<code>
[site]
listen = 127.0.0.1:9000
user = site
group = site
request_slowlog_timeout = 5s
slowlog = /var/log/php-fpm/slowlog-site.log
listen.allowed_clients = 127.0.0.1
pm = dynamic
pm.max_children = 5
pm.start_servers = 3
pm.min_spare_servers = 2
pm.max_spare_servers = 4
pm.max_requests = 200
listen.backlog = -1
pm.status_path = /status
request_terminate_timeout = 120s
rlimit_files = 131072
rlimit_core = unlimited
catch_workers_output = yes
env[HOSTNAME] = $HOSTNAME
env[TMP] = /tmp
env[TMPDIR] = /tmp
env[TEMP] = /tmp
</code> ⇒ pool [site] uses port 9000. If the default [www] pool above is also enabled, one of the two must be moved to another port, because both listen on 127.0.0.1:9000.

* /etc/php-fpm.d/blog.conf<code>
[blog]
listen = 127.0.0.1:9001
user = blog
group = blog
request_slowlog_timeout = 5s
slowlog = /var/log/php-fpm/slowlog-blog.log
listen.allowed_clients = 127.0.0.1
pm = dynamic
pm.max_children = 4
pm.start_servers = 2
pm.min_spare_servers = 1
pm.max_spare_servers = 3
pm.max_requests = 200
listen.backlog = -1
pm.status_path = /status
request_terminate_timeout = 120s
rlimit_files = 131072
rlimit_core = unlimited
catch_workers_output = yes
env[HOSTNAME] = $HOSTNAME
env[TMP] = /tmp
env[TMPDIR] = /tmp
env[TEMP] = /tmp
</code> ⇒ pool [blog] uses port 9001

* /etc/php-fpm.d/forums.conf<code>
[forums]
listen = 127.0.0.1:9002
user = forums
group = forums
request_slowlog_timeout = 5s
slowlog = /var/log/php-fpm/slowlog-forums.log
listen.allowed_clients = 127.0.0.1
pm = dynamic
pm.max_children = 10
pm.start_servers = 3
pm.min_spare_servers = 2
pm.max_spare_servers = 4
pm.max_requests = 400
listen.backlog = -1
pm.status_path = /status
request_terminate_timeout = 120s
rlimit_files = 131072
rlimit_core = unlimited
catch_workers_output = yes
env[HOSTNAME] = $HOSTNAME
env[TMP] = /tmp
env[TMPDIR] = /tmp
env[TEMP] = /tmp
</code> ⇒ pool [forums] uses port 9002

This is just an example of how to configure multiple pools of different sizes.
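Since every pool needs its own listen address, and the [www] and [site] examples above both use 127.0.0.1:9000, a quick check for duplicates may be useful before reloading php-fpm. The sketch below is an illustration only; the function name duplicate_listens is an assumption, and it only compares the literal values of the listen lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every listen address that appears in more
# than one of the pool configuration files given as arguments.
# Lines such as listen.backlog or listen.allowed_clients are ignored,
# and so are listen lines commented out with ';'.
duplicate_listens() {
    sed -n 's/^[[:space:]]*listen[[:space:]]*=[[:space:]]*//p' "$@" | sort | uniq -d
}

# Usage:
#   duplicate_listens /etc/php-fpm.d/*.conf
```

No output means that every pool file declares a distinct listen value.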

=== Optimize config ===

Example Config:<code ini>
process.max = 15
pm.max_children = 100
pm.start_servers = 10
pm.min_spare_servers = 5
pm.max_spare_servers = 15
pm.max_requests = 1000
</code>

process.max: The maximum number of processes FPM will fork. It is designed to control the global number of processes when using dynamic PM with a lot of pools.

The configuration variable pm.max_children controls the maximum number of FPM processes that can ever run at the same time. This value can be calculated like this:<code>
pm.max_children = (total RAM - 500MB) / average process memory
</code>

* To find the average process memory:<code bash>
ps -ylC php-fpm --sort:rss | awk '!/RSS/ { s+=$8 } END { printf "%s\n", "Total memory used by PHP-FPM child processes: "; printf "%dM\n", s/1024 }'
</code>⇒ this gets the total memory used by all php-fpm processes, based on the basic command ps -ylC php-fpm. Then get the number of php-fpm processes:<code bash>
ps -ylC php-fpm --sort:rss | grep php-fpm | wc -l
</code>And the average process memory:<code>
Avg Memory = Total Memory / number of processes
</code>

* Why 500MB? It depends on what is running on your system, but you want to keep memory free for nginx (about 20MB), MySQL and other services.
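The calculation can be scripted. The following is a minimal sketch that reads the formula as (total RAM - reserved memory) / average process memory; the function names avg_rss_mb and estimate_max_children and the sample figures are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: average resident memory in MB of the processes
# whose RSS values (in KB, one per line) arrive on stdin,
# e.g. from: ps -C php-fpm -o rss=
avg_rss_mb() {
    awk '{ sum += $1; n++ } END { if (n > 0) printf "%d\n", sum / n / 1024 }'
}

# Hypothetical helper: estimate pm.max_children from figures in MB.
# $1 total RAM, $2 memory reserved for other services,
# $3 average memory of one php-fpm child.
estimate_max_children() {
    echo $(( ($1 - $2) / $3 ))
}

# Example with assumed figures: 4096 MB of RAM, 500 MB reserved,
# 40 MB per php-fpm child.
estimate_max_children 4096 500 40
```

With these assumed figures the estimate is 89 children; shell integer division rounds down, which errs on the safe side.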

Other configs:

* pm.start_servers: The number of children created on startup. The value must be between pm.min_spare_servers and pm.max_spare_servers.<code>
pm.start_servers = (pm.max_spare_servers + pm.min_spare_servers)/2
</code>

* pm.max_requests: Keep it high to avoid frequent process respawns. Note: if your PHP code has a memory leak, lower this value so that processes are recycled sooner and the memory is freed.

===== Nginx Security =====

refer:

- List nginx security issues: http://nginx.org/en/security_advisories.html